Retrieval-Augmented Generation (RAG): The Future of NLP in 2025

An in-depth look at Retrieval-Augmented Generation (RAG): how it works, its advantages, applications, and challenges, and where it is heading in natural language processing (NLP) in 2025.


Introduction

Natural Language Processing (NLP) is changing rapidly, and Retrieval-Augmented Generation (RAG) is at the forefront of that change. RAG is less a single algorithm than a paradigm shift in how we build and use language models: instead of relying only on what a model memorized during training, the system looks up relevant information at query time and hands it to the model. In 2025, RAG is poised to become a cornerstone of many NLP applications, addressing key limitations of purely generative models and improving accuracy, context-awareness, and adaptability.

This post covers how RAG works, its advantages, applications, and challenges, and where it is heading. Along the way, we'll look at how this technique is reshaping the way we interact with technology and information.

Understanding Retrieval-Augmented Generation (RAG): The Mechanics

At its core, RAG combines the strengths of two fundamental approaches in NLP:

- Retrieval-Based Methods: These methods find and fetch relevant information from an external knowledge base, such as a document repository, a database, or web search results. The key is the ability to quickly locate the most pertinent pieces of information for a specific user query.

- Generative Models: Generative models, such as large language models (LLMs), are trained to create new content. They are adept at generating text, translating languages, and even writing code, based on patterns learned during training.
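To make the retrieval side concrete, here is a minimal Python sketch of similarity-based retrieval. It assumes every document and the query have already been converted to vectors by some embedding model. The three-dimensional vectors and document ids below are made-up toy values, not output from a real model. Documents are ranked by cosine similarity to the query, and the top k are returned.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "embeddings" for illustration only; a real system gets these from an embedding model.
docs = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.8, 0.1],
    "warranty-terms": [0.7, 0.0, 0.6],
}
query = [1.0, 0.0, 0.2]
print(top_k(query, docs))
```

This linear scan compares the query against every document, which does not scale to large collections. Production systems typically replace it with a vector index built for fast (often approximate) nearest-neighbor search.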

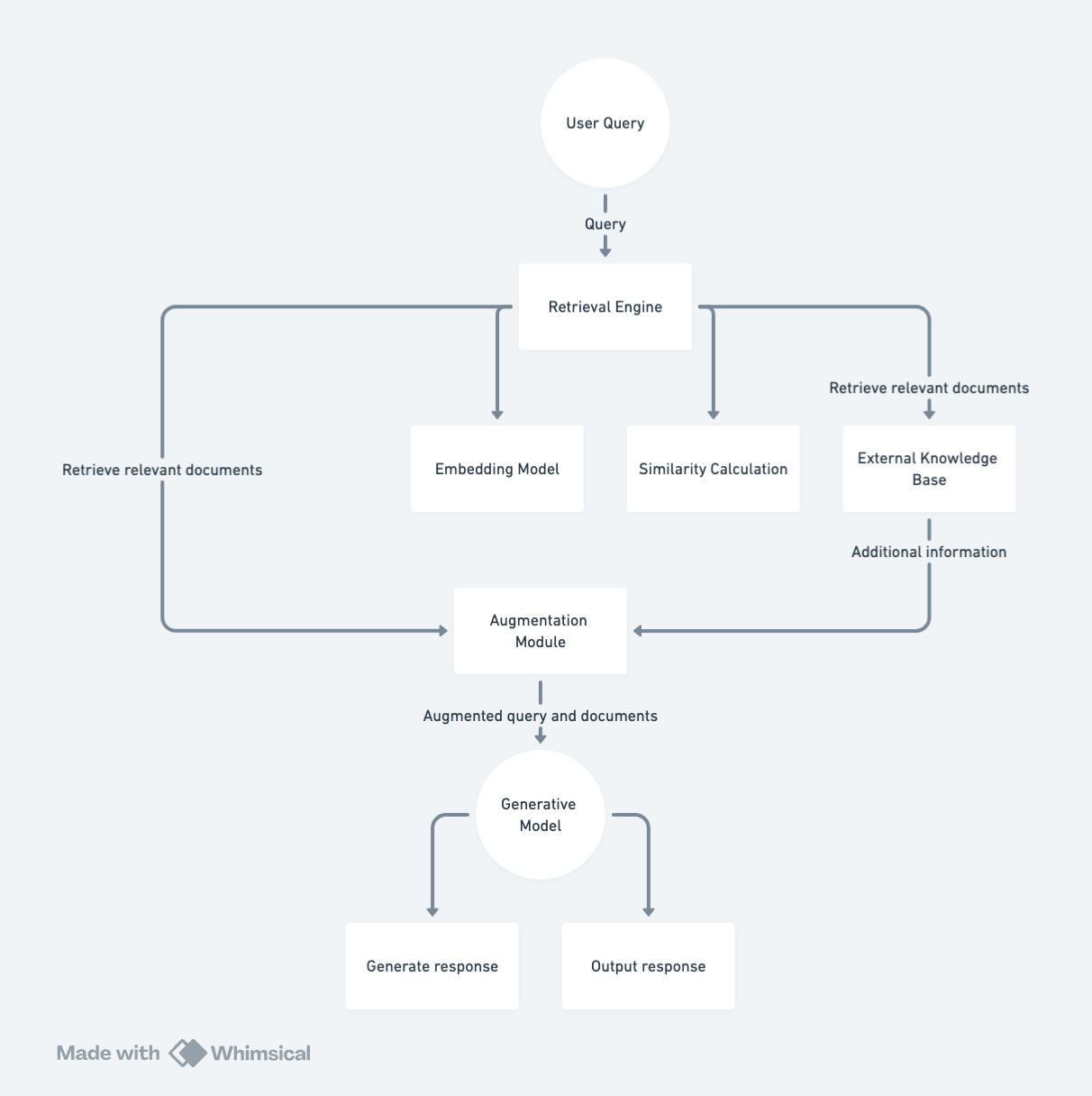

RAG brings these two components together in a cohesive workflow:

- User Input: A user submits a query or a prompt.

- Retrieval Phase: The RAG system uses a retrieval mechanism to identify and fetch relevant information from the external knowledge source. This mechanism often combines an embedding model that turns text into vectors, a similarity measure such as cosine similarity, and an index for fast lookup. This phase acts as an “information scout,” finding the most useful data for the specific query.

- Augmentation Phase: The retrieved information is added to the original user input to create a richer, context-aware prompt. This augmented prompt serves as the input for the generative model.

- Generation Phase: The generative model uses the augmented prompt to produce a response grounded in the retrieved context, which makes it more likely to be accurate and relevant.
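The four phases above can be sketched end to end in a few dozen lines of Python. This is an illustrative toy, not a production design. The knowledge base is a hard-coded list of hypothetical documents. The "embedding" is just a word-count vector, where a real system would use a trained embedding model. `generate` is a hypothetical placeholder marking where a call to an actual LLM would go.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base of (source id, text) pairs.
KNOWLEDGE_BASE = [
    ("policy.md", "Electronic items can be returned within 30 days with a receipt."),
    ("shipping.md", "Standard shipping takes 3 to 5 business days."),
    ("warranty.md", "Laptops carry a one-year limited warranty."),
]


def embed(text: str) -> Counter:
    """Stand-in 'embedding': lowercase word counts (a real system uses an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list[tuple[str, str]]:
    """Retrieval phase: rank knowledge-base entries by similarity to the query."""
    q = embed(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: similarity(q, embed(doc[1])), reverse=True)
    return ranked[:k]


def augment(query: str, passages: list[tuple[str, str]]) -> str:
    """Augmentation phase: combine the retrieved passages with the user's query."""
    context = "\n".join(f"[{src}] {text}" for src, text in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation phase placeholder (hypothetical): a real system sends `prompt` to an LLM."""
    return f"<LLM response to a {len(prompt)}-character prompt>"


# User input -> retrieval -> augmentation -> generation.
user_query = "Can I return electronic items?"
passages = retrieve(user_query)
prompt = augment(user_query, passages)
print(prompt)
print(generate(prompt))
```

Keeping each phase in its own function reflects the workflow's structure. The retriever, the prompt template, and the model can each be swapped or tuned independently.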

RAG vs. Traditional Language Models: Why RAG Is Superior

Traditional, standalone language models answer purely from what they learned on vast amounts of training text. While they generalize remarkably well, they suffer from a few critical limitations:

- Lack of Real-Time Knowledge: They are trained on a static dataset with a fixed cutoff date, so they cannot know about anything that happened afterward or reliably answer questions about current events.

- Hallucinations and Factual Inaccuracies: Because they generate text from learned patterns rather than by looking facts up, they sometimes produce plausible-sounding but nonsensical or factually incorrect output.

- Limited Context: The fixed size of an LLM's input context window limits how much supporting material can accompany a question, which restricts its ability to produce complex, multi-layered responses.

RAG addresses these drawbacks effectively:

- Access to External Knowledge: RAG can draw from up-to-date information sources, making it capable of responding to contemporary queries.

- Reduced Factual Errors: Grounding generated content in retrieved information helps reduce hallucinations and improves the factual accuracy of responses.

- Enhanced Context Awareness: The augmentation step fills the limited context window with the passages most relevant to the query, leading to more detailed and nuanced responses.

- Improved Explainability: Because the system knows which documents it retrieved, it can show users where an answer came from, making the system more transparent and easier to audit.
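Context awareness and explainability both show up in how retrieved passages are packed into the prompt. The sketch below makes two assumptions: passages arrive ranked best-first, and a simple word count stands in for the model's real tokenizer. It greedily fills a fixed budget, which mirrors the constraint of a context window. It also numbers each passage so the generated answer can cite [1], [2], and so on. The returned source list is what an interface could display as the origin of an answer. The function name and FAQ passages are hypothetical.

```python
def pack_context(passages: list[tuple[str, str]], max_words: int) -> tuple[str, list[str]]:
    """Greedily pack best-first (source_id, text) passages into a word budget.

    Word count is a crude stand-in for the model's real tokenizer.
    Returns the numbered context block and the ids of the sources it contains.
    """
    lines: list[str] = []
    used: list[str] = []
    total = 0
    for source_id, text in passages:
        n_words = len(text.split())
        if total + n_words > max_words:
            continue  # this passage would overflow; a shorter, lower-ranked one may still fit
        used.append(source_id)
        lines.append(f"[{len(used)}] {text}")
        total += n_words
    return "\n".join(lines), used


# Hypothetical retrieved passages, already ranked best-first.
faq_passages = [
    ("faq#returns", "Returns are accepted within 30 days."),
    ("faq#refunds", "Refunds go back to the original payment method within 5 to 7 business days after the item arrives."),
    ("faq#exchanges", "Exchanges are free."),
]
context, sources = pack_context(faq_passages, max_words=12)
print(context)
print("Sources:", sources)
```

With a 12-word budget, the long refunds passage is skipped and the short exchanges passage still fits. Real systems often use smarter selection, but the trade-off between relevance and space is the same.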

RAG Applications in 2025 and Beyond

By 2025, we can expect RAG to drive innovation across many applications:

- Search Engines: RAG will allow search engines to provide more relevant, accurate, and context-aware results. Instead of just listing links, the search engine will provide direct, summarized answers based on information retrieved from various online sources.

- Customer Service Chatbots: RAG will empower chatbots to handle complex queries and provide human-like responses. Access to up-to-date product information, knowledge bases, and company policies improves accuracy and customer satisfaction.

- Content Generation: RAG can significantly improve the quality of generated content, such as articles, reports, and scripts. Grounding drafts in retrieved factual data makes the content more robust and informative.

- Education and Research: RAG-powered tools will help students and researchers quickly find and summarize relevant information from textbooks, academic papers, and other research materials.

- Code Generation: RAG can access code documentation and libraries, enabling more robust and reliable code generation by LLMs.

- Personalized News and Information: RAG can tailor news consumption experiences by retrieving and filtering relevant content based on user interests and preferences.

Challenges and Limitations of RAG

Despite its potential, RAG is not without its challenges:

- Retrieval Quality: The overall effectiveness of RAG relies heavily on the accuracy and relevance of the retrieved information. If the retriever returns irrelevant or incomplete passages, the generated answer will suffer, however capable the model is.

- Computational Cost: Indexing large knowledge bases and running a retrieval step for every query add computational cost and latency.

- Data Bias: RAG can inherit biases present in the external knowledge sources. Ensuring data quality and fairness is crucial.

- Integration Complexity: Integrating retrieval and generative models into a single seamless system can be intricate and require considerable engineering expertise.

- Scalability: Scaling RAG to handle large numbers of users and ever-growing datasets presents a significant technical challenge.
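Because retrieval quality drives everything downstream, it is worth measuring the retriever on its own. One common metric is recall@k, the fraction of the documents known to be relevant for a query that appear in the retriever's top k results. The sketch below assumes a small hand-labelled test set. The document ids and relevance judgments are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant document ids that appear in the top-k retrieved ids."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs: list[tuple[list[str], set[str]]], k: int) -> float:
    """Average recall@k over (retrieved_ids, relevant_ids) pairs, one pair per test query."""
    scores = [recall_at_k(retrieved, relevant, k) for retrieved, relevant in runs]
    return sum(scores) / len(scores)


# Hypothetical labelled queries: what the retriever returned vs. what a human marked relevant.
runs = [
    (["doc3", "doc7", "doc1"], {"doc3", "doc1"}),  # both relevant docs are in the top 3
    (["doc2", "doc9", "doc4"], {"doc5"}),          # the relevant doc was missed entirely
]
print(mean_recall_at_k(runs, k=3))  # 0.5
```

Tracking a number like this while changing embedding models, chunk sizes, or index settings makes it possible to tell whether a change improved retrieval before looking at generated answers.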

Future Advancements and Evolution of RAG

To fully unlock the potential of RAG, several advancements are needed:

- Improved Retrieval Mechanisms: Development of more efficient and accurate retrieval methods, utilizing semantic search and better indexing techniques.

- Dynamic Knowledge Integration: Moving beyond static knowledge bases to integrate real-time information streams and adapt to changing datasets.

- Explainable Retrieval: Providing users with transparent insights into why particular information was retrieved.

- Bias Mitigation: Implementing mechanisms to detect and mitigate biases in retrieved information, ensuring fairness and equity.

- Adaptive RAG Architectures: Creating RAG systems that adapt to different domains and task requirements, for example by tuning how many passages to retrieve or which sources to search.

- Edge RAG: Running RAG on or near users’ devices to reduce latency and keep data local, which enhances privacy.

- Integration with Multi-Modal Data: Extending RAG beyond text to images, audio, and video, leading to richer contextual understanding.

- Advanced AI Training: Using techniques like Reinforcement Learning from Human Feedback (RLHF) to further fine-tune both retrieval and generation components.
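One example of improved retrieval is to run a keyword search and a semantic (vector) search side by side and merge their results. A simple, widely used merging rule is reciprocal rank fusion (RRF). Each document scores the sum of 1 / (k + rank) over every ranked list it appears in, and k = 60 is the value commonly used. The ranked lists below are hypothetical stand-ins for the output of two real retrievers.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one fused ranking.

    Each document scores sum(1 / (k + rank)) over every list it appears in,
    with rank starting at 1. A larger k flattens the advantage of top positions.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["doc_a", "doc_b", "doc_c"]   # hypothetical keyword-index results
semantic_hits = ["doc_c", "doc_a", "doc_d"]  # hypothetical vector-index results
print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
# ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

RRF uses only ranks, not raw scores. This sidesteps the problem that keyword scores and vector similarities live on different scales. Documents found by both retrievers, like `doc_a` and `doc_c` here, rise to the top.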

Examples in Practice

- Search Engine: A user searches for “Latest discoveries in cancer treatment.” RAG retrieves relevant research papers, news articles, and clinical trial information from various sources, then summarizes them into a coherent response that cites its sources.

- Customer Service Chatbot: A customer asks about a “return policy for electronic items.” RAG retrieves the relevant policy information from the company’s website and FAQs, then answers the query with precise instructions.

- Content Generation: A writer prompts the AI to “write an article on the history of the Roman Empire.” RAG fetches information from reputable sources, historical databases, and scholarly articles, providing the foundation for a well-informed and accurate article.
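In the customer service example, the company's policy pages and FAQs usually have to be split into smaller chunks before they are embedded and indexed. That way, retrieval can return the specific passage about returning electronics rather than an entire page. Below is a minimal sketch of one common approach, fixed-size word windows with overlap. The function name, chunk size, overlap, and policy text are illustrative assumptions, not recommendations. Real pipelines often split on sentences or headings instead.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


# Hypothetical policy text for illustration.
policy_text = (
    "Electronic items may be returned within 30 days of delivery. "
    "Items must be in their original packaging. Opened software cannot be returned. "
    "Refunds are issued to the original payment method."
)
for chunk in chunk_words(policy_text, chunk_size=12, overlap=3):
    print(chunk)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of indexing some words twice.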

Conclusion

Retrieval-Augmented Generation represents a fundamental advancement in the field of NLP. By 2025, RAG will be instrumental in creating more accurate, reliable, and context-aware AI systems. While challenges remain, ongoing research and development are paving the way for RAG to truly transform how we interact with technology and information. The future of NLP is not just about generating text, but about creating insightful and factually sound responses that are rooted in comprehensive and dynamically updated knowledge. RAG embodies this paradigm shift and is poised to shape the future of AI in the years to come.