HDFS Tutorial: A Deep Dive into the Backbone of Big Data

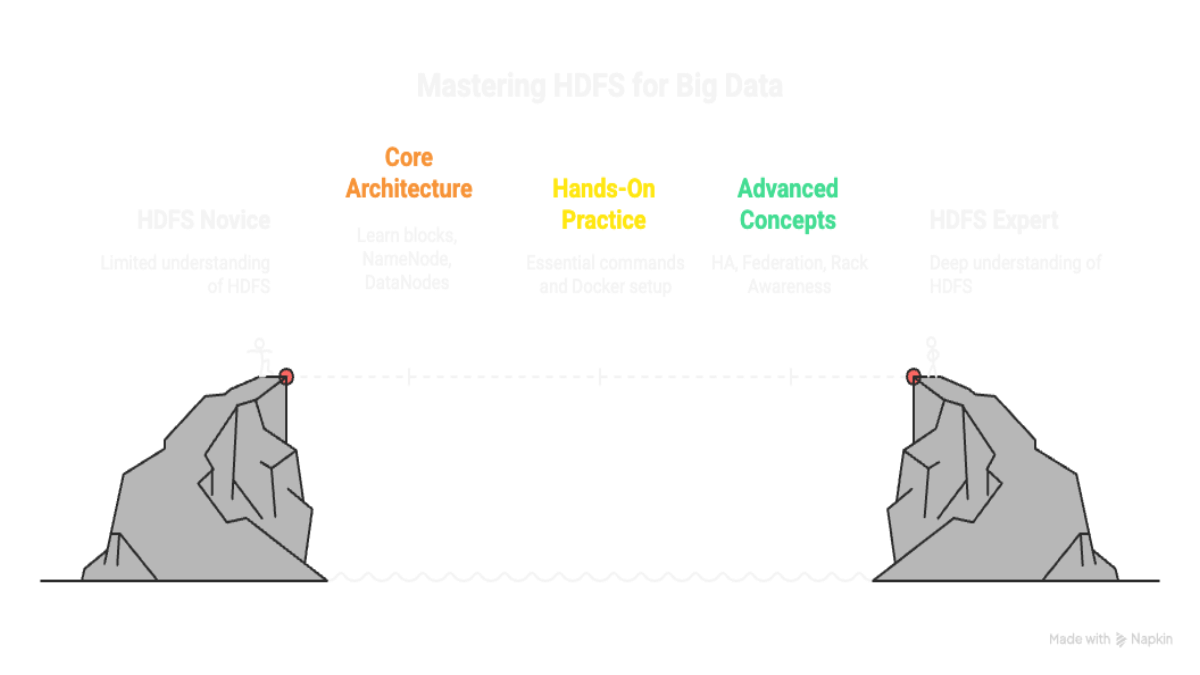

A comprehensive, hands-on tutorial for developers to understand the Hadoop Distributed File System (HDFS), covering core architecture, essential commands, and its role in the modern data stack.

Welcome to this in-depth guide to the Hadoop Distributed File System (HDFS). Before there were data lakes in the cloud, there was HDFS—the original, battle-tested storage system that made the big data revolution possible. Understanding HDFS is fundamental to grasping the principles of distributed storage and the entire Hadoop ecosystem.

This tutorial will demystify HDFS, breaking down its architecture, showing you the essential commands to manage it, and discussing its relevance in today’s cloud-native world.

Table of Contents

- What is HDFS and Why Was It Created?

- Part 1: The Core Architecture of HDFS

- Concept 1: Splitting Files into Blocks

- Concept 2: The Master-Slave Architecture - NameNode and DataNodes

- Concept 3: Replication - The Key to Fault Tolerance

- How a File Read/Write Operation Works

- Part 2: Getting Hands-On with HDFS

- Prerequisites & Easy Setup with Docker

- Essential HDFS Shell Commands (Your Cheat Sheet)

- Part 3: Advanced HDFS Concepts

- NameNode High Availability (HA) - Eliminating the Single Point of Failure

- HDFS Federation

- Rack Awareness

- HDFS Security with Kerberos

- Part 4: HDFS in the Modern Data Stack

- HDFS vs. Cloud Object Storage (like Amazon S3)

- Where Does HDFS Still Shine?

- Conclusion & Next Steps

What is HDFS and Why Was It Created?

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. Unlike the file system on your laptop (like NTFS or APFS), which manages data on a single machine, HDFS manages data across a large cluster of machines.

It was created to solve two major problems that arose with “big data”:

- Storage: How do you store a file that is terabytes or petabytes in size—far too large to fit on a single hard drive?

- Resilience: What happens if one of the machines storing your data fails? How do you prevent data loss?

HDFS addresses these by splitting large files into smaller pieces and storing redundant copies across the cluster, providing immense scalability and fault tolerance. It is the storage layer of the Apache Hadoop project.

Part 1: The Core Architecture of HDFS

To understand HDFS, you need to know about three fundamental concepts: Blocks, Nodes, and Replication.

Concept 1: Splitting Files into Blocks

When you place a file into HDFS, it’s not stored as a single entity. Instead, it’s split into large blocks of a fixed maximum size; only the last block of a file may be smaller. The default block size is 128 MB, and 256 MB is also common.

- Why so large? A large block size minimizes the amount of metadata the system needs to track. If you have a 10TB file, it’s much more efficient to track ~80,000 blocks of 128MB than billions of tiny 4KB blocks. This design is optimized for large files and streaming data access (reading the whole file), not for random access of small files.
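The arithmetic behind that comparison can be checked with a few lines of shell. This is a minimal sketch that assumes binary units (1 TB = 1024^4 bytes); the function name `blocks_needed` is made up for illustration and is not part of any Hadoop tool.

```bash
# Ceiling division: how many blocks a file of a given size occupies.
blocks_needed() {
  file_bytes=$1
  block_bytes=$2
  echo $(( (file_bytes + block_bytes - 1) / block_bytes ))
}

TEN_TB=$(( 10 * 1024 * 1024 * 1024 * 1024 ))   # 10 TB in bytes
MB_128=$(( 128 * 1024 * 1024 ))                # 128 MB in bytes
KB_4=$(( 4 * 1024 ))                           # 4 KB in bytes

echo "128 MB blocks: $(blocks_needed "$TEN_TB" "$MB_128")"   # prints 81920
echo "4 KB blocks:   $(blocks_needed "$TEN_TB" "$KB_4")"     # prints 2684354560
```

The NameNode has to track every one of those blocks, so moving from 4 KB to 128 MB blocks shrinks the bookkeeping for this one file by a factor of 32,768.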

Concept 2: The Master-Slave Architecture - NameNode and DataNodes

HDFS has two types of daemons (services) running on the machines in the cluster:

- NameNode (The Master): This is the “brain” of the HDFS cluster. It does not store any of the actual file data. Instead, it holds all the metadata. This includes:

- The entire file system directory tree (like ls shows you).

- File permissions and ownership.

- A map of which blocks make up a file and which DataNodes store those blocks.

Think of it as the library’s card catalog: it tells you where to find every book (data block) but doesn’t hold the books themselves. There is typically only one active NameNode in a basic cluster, making it a potential single point of failure (more on that later).

- DataNodes (The Slaves/Workers): These are the workhorses of the cluster. Their job is simple:

- Store and retrieve data blocks when told to by the NameNode or a client.

- Periodically send a “heartbeat” to the NameNode to show they are alive, and a “block report” listing the blocks they store.

- A cluster has many DataNodes, often hundreds or thousands.
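How long can a DataNode stay silent before the NameNode gives up on it? The sketch below reproduces the timeout calculation as I understand it from stock Hadoop: twice the recheck interval plus ten heartbeat intervals. The defaults used here (dfs.heartbeat.interval of 3 seconds and dfs.namenode.heartbeat.recheck-interval of 300000 milliseconds) are assumptions, so check hdfs-default.xml for your version. The function name is made up for illustration.

```bash
# Seconds of silence after which a DataNode is treated as dead.
# Arguments: heartbeat interval in seconds, recheck interval in milliseconds.
dead_node_timeout_seconds() {
  heartbeat_s=$1
  recheck_ms=$2
  echo $(( 2 * recheck_ms / 1000 + 10 * heartbeat_s ))
}

# Assumed defaults: 3 s heartbeat, 300000 ms (5 min) recheck interval.
echo "DataNode declared dead after $(dead_node_timeout_seconds 3 300000) s"
# prints: DataNode declared dead after 630 s
```

With those values a missing DataNode is written off after about ten and a half minutes, which keeps a brief network hiccup from triggering a wave of re-replication.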

Concept 3: Replication - The Key to Fault Tolerance

This is HDFS’s superpower. To protect against data loss from a machine failure, HDFS stores several copies (replicas) of each block on different machines in the cluster. The number of copies is called the replication factor, and it is 3 by default.

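With the default placement policy on a multi-rack cluster, for every block of data:

- The first copy is stored on the DataNode where the writing client runs, or on another DataNode chosen by the NameNode if the client runs outside the cluster.

- A second copy is stored on a DataNode in a different server rack.

- A third copy is stored on a different DataNode in the same rack as the second copy.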

This strategy protects against both a single disk/machine failure and a complete rack failure (e.g., if a network switch for that rack fails). If a DataNode goes offline, the NameNode detects it and automatically instructs other DataNodes to create new replicas of the blocks that were lost.
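Replication has a direct cost in disk space, which is worth estimating before sizing a cluster. The following is a rough sketch only: it ignores non-HDFS disk usage and free-space headroom, and the function names and the 120 TB cluster are hypothetical.

```bash
# Raw disk consumed once every block is stored `replication` times.
raw_storage_tb() {
  logical_tb=$1
  replication=$2
  echo $(( logical_tb * replication ))
}

# Logical data a cluster of a given raw size can hold.
usable_capacity_tb() {
  raw_tb=$1
  replication=$2
  echo $(( raw_tb / replication ))
}

echo "10 TB of files at replication 3 uses $(raw_storage_tb 10 3) TB of disk"
# prints: 10 TB of files at replication 3 uses 30 TB of disk
echo "A 120 TB cluster at replication 3 holds about $(usable_capacity_tb 120 3) TB of files"
# prints: A 120 TB cluster at replication 3 holds about 40 TB of files
```

The replication factor can also be changed per file or directory with hdfs dfs -setrep, which is one way to trade durability for space on data that is easy to regenerate.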

How a File Read/Write Operation Works (Simplified)

- Writing a File:

- Your client asks the NameNode, “I want to write my_large_file.csv.”

- The NameNode checks permissions and says, “Okay, for the first block, store it on DataNode 5, 12, and 23. For the second block, use DataNode 8, 15, and 31…”

- Your client streams each block to the first DataNode in its list, which forwards the data to the second, which forwards it to the third (a replication pipeline).

- Reading a File:

- Your client asks the NameNode, “Where can I find the blocks for my_large_file.csv?”

- The NameNode replies with a list of all blocks and the DataNodes that hold each one.

- Your client connects directly to the closest DataNode for each block to read the data in parallel. Notice the NameNode is not involved in the actual data transfer, preventing it from being a bottleneck.
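Both conversations above happen block by block, so it helps to see how a file breaks down. The sketch below lists the blocks a file would occupy, working in whole megabytes for readability. The function name `plan_blocks` and the 300 MB example file are made up for illustration; a real client gets this layout from the NameNode.

```bash
# Print one line per block: its index and size in MB.
# The last block holds only the remainder, since HDFS does not pad blocks.
plan_blocks() {
  file_mb=$1
  block_mb=$2
  index=0
  remaining=$file_mb
  while [ "$remaining" -gt 0 ]; do
    if [ "$remaining" -ge "$block_mb" ]; then
      size=$block_mb
    else
      size=$remaining
    fi
    echo "block $index: $size MB"
    remaining=$(( remaining - size ))
    index=$(( index + 1 ))
  done
}

plan_blocks 300 128
# block 0: 128 MB
# block 1: 128 MB
# block 2: 44 MB
```

For each of those three blocks the NameNode hands out its own list of DataNodes, which is why different blocks of the same file can end up on entirely different machines.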

Part 2: Getting Hands-On with HDFS

Prerequisites & Easy Setup with Docker

The easiest way to experiment with HDFS without a complex installation is by using Docker.

- Install Docker on your machine.

- Pull a popular pre-configured Hadoop image. The bde2020/hadoop-base image is a great choice for a simple, single-node setup.

```bash
# Start a container and open a shell inside it
docker run -it --name hadoop-hdfs bde2020/hadoop-base /bin/bash

# Once inside the container, start the HDFS services
$HADOOP_HOME/sbin/start-dfs.sh
```

You are now inside a shell with a running HDFS instance!

Essential HDFS Shell Commands (Your Cheat Sheet)

HDFS commands are very similar to Linux shell commands, but they are prefixed with hdfs dfs.

```bash
# 1. Create a directory
# Syntax: hdfs dfs -mkdir [-p] /path/to/directory
# -p creates missing parent directories and does not fail if they already exist
hdfs dfs -mkdir -p /user/chrispy

# 2. List files and directories
# Syntax: hdfs dfs -ls /path
hdfs dfs -ls /user
# Output should show the 'chrispy' directory you just created.

# 3. Put a local file into HDFS
# First, create a sample file in the local container filesystem
echo "Hello HDFS World!" > /tmp/sample.txt
# Now, copy it from local to HDFS
# Syntax: hdfs dfs -put /local/path /hdfs/path
hdfs dfs -put /tmp/sample.txt /user/chrispy/
# Verify it's there
hdfs dfs -ls /user/chrispy/

# 4. View the content of a file in HDFS
# Syntax: hdfs dfs -cat /hdfs/path/to/file
hdfs dfs -cat /user/chrispy/sample.txt
# Output: Hello HDFS World!

# 5. Get a file from HDFS back to the local filesystem
# Syntax: hdfs dfs -get /hdfs/path /local/path
hdfs dfs -get /user/chrispy/sample.txt /tmp/sample_from_hdfs.txt
# Check the local file was created
ls -l /tmp/sample_from_hdfs.txt

# 6. Check disk usage
# Syntax: hdfs dfs -du -h /hdfs/path (the -h makes it human-readable)
hdfs dfs -du -h /user/chrispy

# 7. Remove a file or directory
# Syntax for file: hdfs dfs -rm /path/to/file
# Syntax for directory: hdfs dfs -rm -r /path/to/directory
hdfs dfs -rm /user/chrispy/sample.txt
hdfs dfs -rm -r /user/chrispy
```

Part 3: Advanced HDFS Concepts

- NameNode High Availability (HA): The biggest weakness of the original HDFS was that the NameNode was a Single Point of Failure (SPOF). If it crashed, the whole cluster was unusable. Modern HDFS solves this with an Active/Standby NameNode configuration. They share edit logs via JournalNodes, and ZooKeeper manages the automatic failover if the active one dies.

- HDFS Federation: To scale horizontally, HDFS Federation allows a cluster to have multiple independent NameNodes, each managing a different part of the filesystem namespace (e.g., /user is managed by one, /data by another). This improves performance and isolation.

- Rack Awareness: HDFS can be configured to know the physical rack location of each DataNode. The NameNode uses this information to intelligently place block replicas, ensuring that copies are spread across different racks to protect against rack-level outages.

- HDFS Security with Kerberos: In a production environment, HDFS is almost always secured using Kerberos. Kerberos provides strong authentication for users and services, so every client and daemon must prove its identity before HDFS applies its file permission checks.
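Rack awareness is the one item in this list that is commonly set up with a small script. Hadoop can call an executable named in the net.topology.script.file.name property, passing host names or IP addresses as arguments and reading one rack path per argument from standard output. The following is a minimal sketch of such a script. The subnets and rack names are hypothetical and would have to match your own network layout.

```bash
#!/bin/sh
# Hypothetical mapping from IP subnet to rack path (made up for illustration).
resolve_rack() {
  case "$1" in
    10.0.1.*) echo "/dc1/rack1" ;;
    10.0.2.*) echo "/dc1/rack2" ;;
    *)        echo "/default-rack" ;;   # fallback for unknown hosts
  esac
}

# Print one rack path per argument, in the same order as the arguments.
for host in "$@"; do
  resolve_rack "$host"
done
```

Saved under a name such as topology.sh (also hypothetical) and made executable, running it with the arguments 10.0.1.15 10.0.2.7 192.168.0.9 prints /dc1/rack1, /dc1/rack2, and /default-rack on separate lines. A case statement is enough for a handful of subnets; larger sites usually look the mapping up in a data file instead.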

Part 4: HDFS in the Modern Data Stack

HDFS vs. Cloud Object Storage (like Amazon S3)

In recent years, cloud object storage like Amazon S3, Azure Blob Storage, and Google Cloud Storage has become the default choice for data lakes in the cloud.

| Feature | HDFS | Cloud Object Storage (S3) |

|---|---|---|

| Architecture | Coupled compute & storage | Decoupled compute & storage |

| Cost | High upfront & operational cost | Pay-as-you-go, lower TCO |

| Scalability | Scalable, but requires planning | Virtually infinite, on-demand |

| API | HDFS API, POSIX-like | RESTful HTTP API (S3 API) |

| Performance | Excellent for data locality | Highly scalable throughput |

| Management | Complex to manage and maintain | Fully managed service |

The primary advantage of the cloud is the separation of compute and storage. You can spin up a massive Spark cluster to process data in S3 and then shut it down, paying only for the time it was running, while your data remains safely and cheaply in S3.

Where Does HDFS Still Shine?

Despite the rise of the cloud, HDFS is far from obsolete. It remains a critical component for:

- On-Premise Deployments: For companies that cannot or will not move their data to the public cloud due to security, compliance, or data sovereignty reasons.

- Legacy Hadoop Systems: Thousands of organizations still have massive investments in on-premise Hadoop clusters where HDFS is the storage foundation.

- Extreme Performance Needs: In scenarios where the network latency to cloud storage is a bottleneck, HDFS’s data locality (placing compute on the same nodes as the data) can still provide a performance edge.

Conclusion & Next Steps

You’ve now learned the foundational principles of the Hadoop Distributed File System! You understand its master-slave architecture, how it achieves incredible fault tolerance through replication, and you’ve even run the essential commands to manage it.

Key Takeaways:

- HDFS is built for large files and scalability on commodity hardware.

- The NameNode stores metadata, and DataNodes store the actual data blocks.

- Replication across machines and racks is what prevents data loss when hardware fails.

- While cloud storage is now dominant, HDFS remains a powerful and relevant solution for on-premise big data.

Your next step is to learn about the processing frameworks that run on top of HDFS, such as Apache Spark, Apache Hive, and the original MapReduce. These tools are what unlock the true value of the data you store in HDFS.