PySpark Tutorial: The Ultimate Guide from Beginner to Advanced

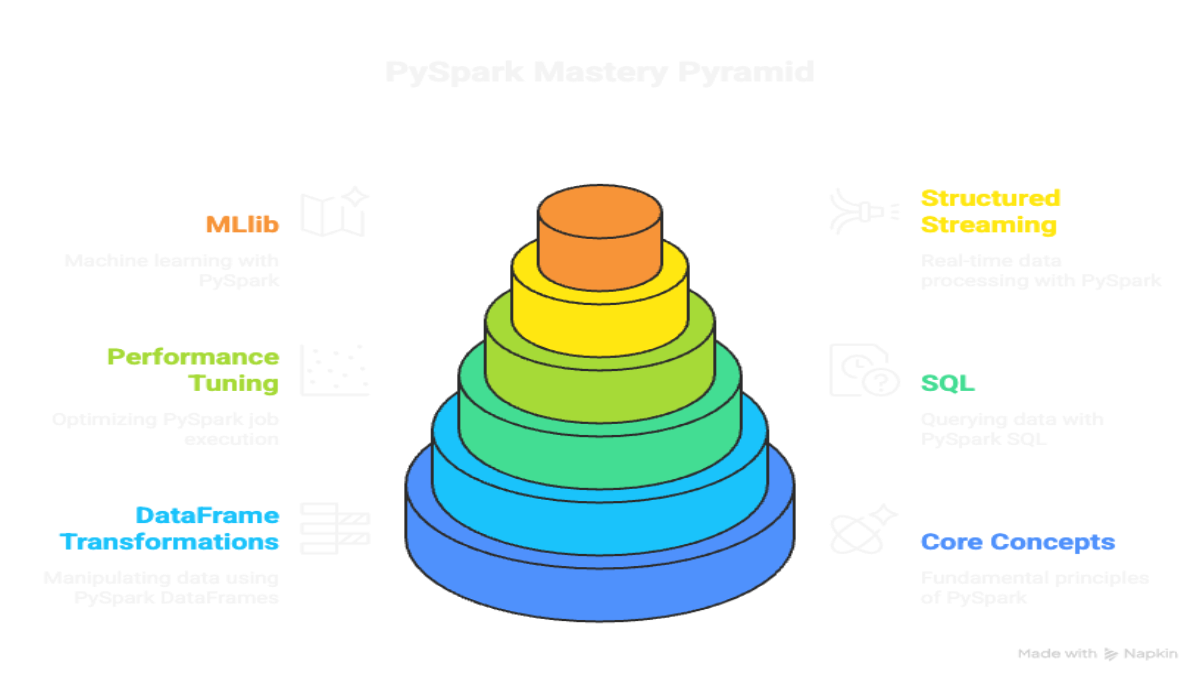

A comprehensive, hands-on tutorial for developers to master PySpark. This guide covers core concepts, DataFrame transformations, SQL, performance tuning, Structured Streaming, and MLlib with detailed explanations and examples.

Welcome to the ultimate guide to PySpark! Apache Spark is the premier engine for large-scale data processing, and its Python API, PySpark, brings its power to the familiar world of Python. This tutorial is designed to be your one-stop resource, guiding you from the fundamental building blocks to advanced, production-ready techniques.

Whether you’re a data engineer building ETL pipelines, a data scientist training models on massive datasets, or a Python developer looking to scale up, this guide has you covered. Let’s dive in and unlock the power of distributed computing.

Table of Contents

- Introduction: What is PySpark and Why is it Essential?

- Setup & Prerequisites

- Part 1: The Foundations - SparkSession, Core Concepts & DataFrames

- The SparkSession: Your Entry Point

- Core Concept 1: Lazy Evaluation & the DAG

- Core Concept 2: The DataFrame Architecture

- Creating DataFrames: From Files and In-Memory

- Inspecting Your Data:

show,printSchema,describe

- Part 2: DataFrame Mastery - Transformations & Actions

- Selecting, Renaming, and Dropping Columns

- Filtering Rows:

filter&where - Adding & Modifying Columns:

withColumn - Aggregating Data:

groupBy,agg, andpivot - Joining DataFrames: All Join Types Explained

- Handling Missing Data:

dropna&fillna - Sorting Data:

orderBy

- Part 3: Intermediate to Advanced Techniques

- Spark SQL: Unleash Your SQL Skills

- User-Defined Functions (UDFs): The Good, The Bad, and The Ugly

- Performance Tuning: Caching, Partitioning, and Broadcast Joins

- Part 4: PySpark’s Advanced Ecosystem

- Real-Time Processing with Structured Streaming

- Scalable Machine Learning with MLlib

- Reading and Writing Data: Parquet, CSV, and More

- Conclusion: Your Journey with PySpark

Introduction: What is PySpark and Why is it Essential?

Apache Spark is an open-source, distributed computing system designed for speed, scalability, and unified analytics. It processes data in parallel across a cluster of computers, achieving incredible performance by leveraging in-memory computation.

PySpark is the official Python API for Spark. It allows you to write Spark applications using Python, combining the simplicity of Python with the power of a distributed engine.

Key Advantages:

- Blazing Speed: Up to 100x faster than Hadoop MapReduce for certain applications.

- Massive Scalability: Seamlessly scales from a single laptop to a cluster of thousands of nodes.

- Unified Engine: Supports SQL, real-time data streaming, machine learning (MLlib), and graph processing under one roof.

- Pythonic API: The DataFrame API is intuitive for anyone familiar with libraries like Pandas.

Setup & Prerequisites

- Python 3.6+: The foundation of PySpark.

- Java 8/11: Spark runs on the Java Virtual Machine (JVM). You can verify with

java -version. - Installation: A simple pip install is all you need to get started locally.

1

pip install pyspark

Part 1: The Foundations - SparkSession, Core Concepts & DataFrames

The SparkSession: Your Entry Point

The SparkSession is the main entry point to all PySpark functionality. It’s how you initialize a connection to the Spark cluster.

1

2

3

4

5

6

7

8

9

10

from pyspark.sql import SparkSession

# Initialize a SparkSession

spark = SparkSession.builder \

.appName("PySparkUltimateGuide") \

.master("local[*]") \

.config("spark.driver.memory", "4g") \

.getOrCreate()

print(f"Spark is ready. Version: {spark.version}")

.appName(): A name for your application, visible in the Spark UI..master("local[*]"): Tells Spark to run locally using all available CPU cores. Ideal for development. For a cluster, this would beyarnor a Spark master URL..config(): Set various Spark configurations. Here, we’re allocating 4GB of memory to the driver..getOrCreate(): Gets an existing SparkSession or creates a new one.

Core Concept 1: Lazy Evaluation & the DAG

This is the most critical concept in Spark. Spark does not execute your commands immediately. Instead, it builds a plan of operations called a Directed Acyclic Graph (DAG).

- Transformations: These are operations that create a new DataFrame from an existing one (e.g.,

select,filter,withColumn). They are lazy, meaning they don’t execute until an action is called. - Actions: These are operations that trigger the execution of the DAG and return a value or write to an external system (e.g.,

show,count,collect,write).

This laziness allows Spark’s Catalyst Optimizer to analyze your entire data flow and create the most efficient execution plan.

Core Concept 2: The DataFrame Architecture

A PySpark DataFrame is a distributed, immutable collection of data organized into named columns.

- Distributed: The data is split into partitions, which are processed in parallel across different nodes in the cluster.

- Immutable: You cannot change a DataFrame. Transformations create a new DataFrame. This ensures data consistency and fault tolerance.

Creating DataFrames: From Files and In-Memory

Let’s create a employees.csv file:

id,name,department,salary,age,start_date

1,Alice,HR,60000,34,2020-01-15

2,Bob,Engineering,85000,41,2018-05-20

3,Charlie,Engineering,95000,38,2019-09-01

4,David,Sales,73000,29,2021-03-10

5,Eve,Sales,68000,45,2017-11-28

6,Frank,HR,55000,31,2022-02-05

7,Grace,,78000,52,2016-07-19

1

2

3

4

5

# Create DataFrame by reading a CSV

df = spark.read.format("csv") \

.option("header", "true") \

.option("inferSchema", "true") \

.load("employees.csv")

inferSchemais convenient but can be slow for large files. For production, it’s better to define the schema explicitly for performance and reliability.

Inspecting Your Data

These are actions that trigger computation.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# Show the DataFrame's schema

print("DataFrame Schema:")

df.printSchema()

# Show the first 5 rows

print("Top 5 rows:")

df.show(5, truncate=False) # truncate=False prevents cutting off long strings

# Get a statistical summary of numerical columns

print("Statistical Summary:")

df.describe().show()

# Get the number of rows

print(f"Total number of rows: {df.count()}")

Part 2: DataFrame Mastery - Transformations & Actions

Let’s explore the most common DataFrame methods. Remember, these are transformations and are lazily evaluated.

Selecting, Renaming, and Dropping Columns

1

2

3

4

5

6

7

8

9

10

11

12

13

from pyspark.sql.functions import col

# Select specific columns

df.select("name", "salary").show(3)

# Use the col() function for more complex expressions

df.select(col("name"), (col("salary") / 12).alias("monthly_salary")).show(3)

# Rename a column

df_renamed = df.withColumnRenamed("department", "dept")

# Drop columns

df_dropped = df.drop("start_date", "age")

Filtering Rows: filter & where

filter() and where() are aliases for the same operation.

1

2

3

4

5

6

7

8

# Filter for engineers

df.filter(col("department") == "Engineering").show()

# Filter for employees with salary > 70000

df.filter("salary > 70000").show() # You can use SQL-like strings

# Multiple conditions

df.filter((col("department") == "Sales") & (col("age") > 40)).show()

Adding & Modifying Columns: withColumn

This is the primary way to add or update columns.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

from pyspark.sql.functions import lit, year, current_date

# Add a bonus column (10% of salary)

df_with_bonus = df.withColumn("bonus", col("salary") * 0.1)

# Add a static column

df_with_country = df_with_bonus.withColumn("country", lit("USA"))

# Derive a column from another (years of service)

df_with_service_years = df_with_country.withColumn(

"years_of_service",

(year(current_date()) - year(col("start_date")))

)

df_with_service_years.show()

Aggregating Data: groupBy, agg, and pivot

Aggregations summarize your data.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

from pyspark.sql.functions import avg, sum, count, max, min, countDistinct

# Calculate average salary and max age per department

dept_agg = df.groupBy("department").agg(

avg("salary").alias("avg_salary"),

max("age").alias("max_age"),

count("*").alias("num_employees")

)

dept_agg.show()

# Pivot the data to see the sum of salaries for each department by age group

from pyspark.sql.functions import when

df_age_group = df.withColumn(

"age_group",

when(col("age") < 35, "Young").otherwise("Senior")

)

df_age_group.groupBy("age_group").pivot("department", ["HR", "Sales", "Engineering"]).sum("salary").show()

Joining DataFrames: All Join Types Explained

Let’s create a departments.csv file for joining:

dept_name,manager

HR,John Doe

Engineering,Jane Smith

Sales,Peter Jones

Finance,Sam Brown

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

dept_df = spark.read.csv("departments.csv", header=True)

# Join Types Explained:

# inner: (Default) Only rows with matching keys in both DataFrames.

# left / left_outer: All rows from the left DataFrame, and matched rows from the right. Null if no match.

# right / right_outer: All rows from the right DataFrame, and matched rows from the left. Null if no match.

# full / full_outer: All rows from both DataFrames. Nulls on the side that does not have a match.

# left_semi: Keeps only rows from the left DataFrame that have a match in the right. Like a filter.

# left_anti: Keeps only rows from the left DataFrame that do NOT have a match in the right.

# Example: Left join to see all employees and their managers, if any.

employee_manager_df = df.join(

dept_df,

df.department == dept_df.dept_name,

"left"

)

employee_manager_df.select("id", "name", "department", "manager").show()

Handling Missing Data: dropna & fillna

Our department column for Grace is null.

1

2

3

4

5

6

7

8

9

10

11

# Drop rows where any column is null

df.dropna().show()

# Drop rows where specific columns ('department') are null

df.dropna(subset=["department"]).show()

# Fill nulls with a specific value

df.fillna("Unknown", subset=["department"]).show()

# Fill nulls in multiple columns with different values

df.fillna({"department": "Unassigned", "salary": 0}).show()

Sorting Data: orderBy

1

2

3

4

5

6

7

from pyspark.sql.functions import desc, asc

# Sort by salary in descending order

df.orderBy(desc("salary")).show()

# Sort by department (ascending) then by age (descending)

df.orderBy("department", desc("age")).show()

Part 3: Intermediate to Advanced Techniques

Spark SQL: Unleash Your SQL Skills

You can run SQL queries directly against your DataFrames.

1

2

3

4

5

6

7

8

9

10

11

# Register the DataFrame as a temporary table

df.createOrReplaceTempView("employees_table")

# Run a SQL query

high_earners = spark.sql("""

SELECT name, department, salary

FROM employees_table

WHERE salary > 80000 AND department = 'Engineering'

ORDER BY salary DESC

""")

high_earners.show()

User-Defined Functions (UDFs)

UDFs let you apply custom Python logic, but they come with a performance cost. Use built-in functions whenever possible. Spark cannot optimize UDFs, and they require moving data between the JVM and a Python process.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

from pyspark.sql.functions import udf

from pyspark.sql.types import StringType

# 1. Define a Python function

def get_experience_level(start_date):

years = 2025 - start_date.year # Simplified logic for example

if years > 5:

return "Experienced"

elif years > 2:

return "Intermediate"

else:

return "Junior"

# 2. Register it as a UDF with its return type

experience_udf = udf(get_experience_level, StringType())

# 3. Apply it

df.withColumn("experience_level", experience_udf(col("start_date"))).show()

Performance Tuning: Caching, Partitioning, and Broadcast Joins

- Caching (

.cache()): If you reuse a DataFrame multiple times, caching it in memory can provide a massive speedup by avoiding recomputation.1 2 3 4

df_filtered = df.filter(col("salary") > 70000).cache() print(df_filtered.count()) # First action: computes and caches print(df_filtered.groupBy("department").count().show()) # Second action: reads from cache df_filtered.unpersist() # Release the memory

- Partitioning (

.repartition(),.coalesce()): The number of partitions determines the level of parallelism.repartition(n): Use to increase or decrease partitions. It involves a full data shuffle, which is expensive. Often used after a filter that dramatically reduces data size to increase parallelism.coalesce(n): Only used to decrease the number of partitions. It avoids a full shuffle and is more efficient. A common use isdf.coalesce(1).write...to save output as a single file.

- Broadcast Joins: When joining a large DataFrame with a small one, you can “broadcast” the small DataFrame to every node in the cluster. This avoids a massive, expensive shuffle of the large DataFrame. Spark often does this automatically, but you can force it.

1 2 3 4

from pyspark.sql.functions import broadcast # dept_df is small. Broadcast it. df.join(broadcast(dept_df), df.department == dept_df.dept_name, "inner").show()

Part 4: PySpark’s Advanced Ecosystem

Real-Time Processing with Structured Streaming

Structured Streaming treats a real-time data feed as a continuously appended table. You use the same DataFrame API!

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# Simulate a stream by reading CSVs from a directory

# As you drop new CSV files into 'stream_input/', Spark will process them

streaming_df = spark.readStream.schema(df.schema).csv("stream_input/")

# Group by department and count

department_counts = streaming_df.groupBy("department").count()

# Write the output to the console

query = department_counts.writeStream \

.outputMode("complete") \

.format("console") \

.start()

query.awaitTermination()

Scalable Machine Learning with MLlib

MLlib provides a set of tools for building ML pipelines on large datasets. The core concepts are:

- Transformer: An algorithm that can transform one DataFrame into another (e.g., a feature scaler).

- Estimator: An algorithm that can be

fit()on a DataFrame to produce a Transformer (e.g., a linear regression model). - Pipeline: Chains multiple Transformers and Estimators together into a single workflow.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

from pyspark.ml.feature import VectorAssembler

from pyspark.ml.regression import LinearRegression

from pyspark.ml import Pipeline

# 1. Feature Engineering: Combine features into a single vector

assembler = VectorAssembler(inputCols=["age"], outputCol="features")

# 2. Model Definition (Estimator)

lr = LinearRegression(featuresCol="features", labelCol="salary")

# 3. Pipeline

pipeline = Pipeline(stages=[assembler, lr])

# 4. Train the model

train_data, test_data = df.dropna().randomSplit([0.8, 0.2], seed=123)

model = pipeline.fit(train_data)

# 5. Make predictions

predictions = model.transform(test_data)

predictions.select("age", "salary", "prediction").show()

Reading and Writing Data: Parquet, CSV, and More

Saving your processed data is crucial. Parquet is the preferred format for Spark because it’s a columnar, compressed format that Spark can read extremely efficiently.

1

2

3

4

5

6

7

8

# Save DataFrame as Parquet files (best practice)

dept_agg.write.mode("overwrite").parquet("output/dept_summary.parquet")

# Read the Parquet file back

read_parquet = spark.read.parquet("output/dept_summary.parquet")

# Save as a single CSV file for external tools

dept_agg.coalesce(1).write.mode("overwrite").option("header", "true").csv("output/dept_summary.csv")

mode()specifies the behavior if the data already exists:"overwrite": Replace existing data."append": Add new data."ignore": Do nothing if data exists."errorifexists": (Default) Throw an error.

Conclusion: Your Journey with PySpark

Congratulations on completing this extensive tour of PySpark! You’ve moved from the core concepts of lazy evaluation and DataFrames to mastering transformations, aggregations, joins, and advanced topics like performance tuning, streaming, and machine learning.

The key to mastering PySpark is practice. Apply these concepts to your own datasets, explore the rich set of functions in pyspark.sql.functions, and consult the official PySpark Documentation to continue your learning. Happy data crunching